Hi,

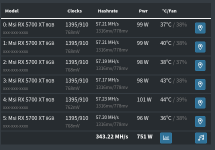

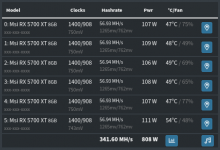

I am failing to update the BIOS on a Gigabyte 5700 XT. The same procedure updates the BIOS on MSI cards with no problem. I am following the instructions on Igor's Lab.

The problem shows up in GPU-Z: after flashing with amdvbflash, the GPU and memory frequency fields are empty. With the stock BIOS, they show frequencies in MHz.

Are there any known problems with flashing Gigabyte cards?

Any suggestions on which other forums I could ask for assistance?

Is it possible that the OEM BIOS is signed, and that any modified BIOS will be rejected?

If the BIOS is signed, are there any tools to re-sign it, or would I need to buy a card from a different vendor?

Thanks,

Alley Cat

